Memory Malware Part 0x1 — Introduction to the binary world

Knowledge is power… however, whatever good or evil may come of it depends solely on intention ×_×

Unlike the previous article series on Malware engineering (where we focused on disk-based infection strategies on Linux platforms), in this series we plan our moves treating memory as the playground. If done right, this can provide far better stealth than disk-based infection, since the system forgets everything (volatile RAM is wiped) on every reboot (at least until NVRAM becomes prevalent). Readers with a fundamental understanding of binary analysis may choose to skip to the next part: this article is an introductory one that doesn't discuss any particular technique used by malware. Rather, it serves as a foundation that should help someone get started with malware research independently.

Although the articles in this series are listed below in chronological order, feel free to skip to whichever interests you most.

- Memory Malware Part 0x1 — Introduction to the binary world

- Memory Malware Part 0x2 — Crafting LD_PRELOAD Rootkits in Userland

- Part 0x3: Focuses on crafting a simple memory injector to elucidate the idea behind process injection on Linux.

NOTE: The topics covered in this article are by no means an exhaustive list of prerequisites for this area of research, but they are enough for what's coming ahead.

Prerequisites

- Endless curiosity and a never-give-up attitude, more than anything.

- Well, some parts of this article assume a basic understanding of the ELF file format. For detailed information, you may want to read the ELF specification.

[Source Code]: CPU, do you ever feel me?

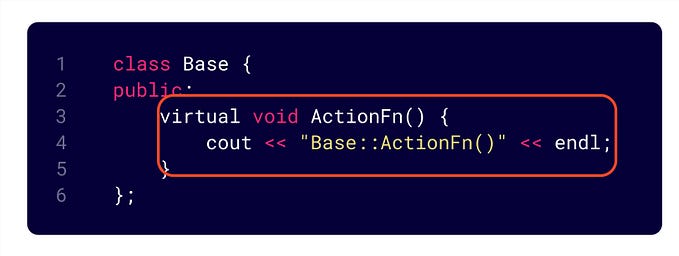

For most people dealing with code, the job ends at writing source code, getting the correct output, partying like a monster and sleeping like a baby. But now that we are stepping into the world of malware, it is important to note that source code is nothing more than ASCII-encoded characters held together in a file. Source code is totally insignificant to the machine until it is converted into something the CPU itself can understand (binary encoding). Every processor architecture has its own instruction set, and assembly language is its human-readable form; learning it lets us communicate with the CPU directly. The image above shows an example of source code and the corresponding assembly instructions (blue highlights, in Intel syntax) along with the hexadecimal-encoded bytes that make sense to the CPU (green highlights).

For instance, a statement as simple as int a = 5; (highlighted in orange above) doesn't make any sense to the CPU until it is converted into c7 45 fc 05 00 00 00 (raw hex bytes in the pink highlight). When these bytes are provided to an Intel x86-64 CPU, they are interpreted as the instruction mov DWORD PTR [rbp-0x4],0x5, which has the effect of storing 5 at memory location [rbp-0x4], i.e. the memory location of variable a.

What we plan to do is somehow inject our intentions (the green-highlighted bytes) into a target process's memory and trick the CPU into executing them. Remember, all of this is done on behalf of a benign target process. Those interested in digging deeper into writing injectable code (i.e. code suitable for injection into memory) can refer to the Shellcoding series.

From Source Code to a Process and Beyond!

Well, making a CPU feel something is quite a task. At least we now know that source code needs to be converted into a binary program in order to be executed. But how does this conversion process actually go?

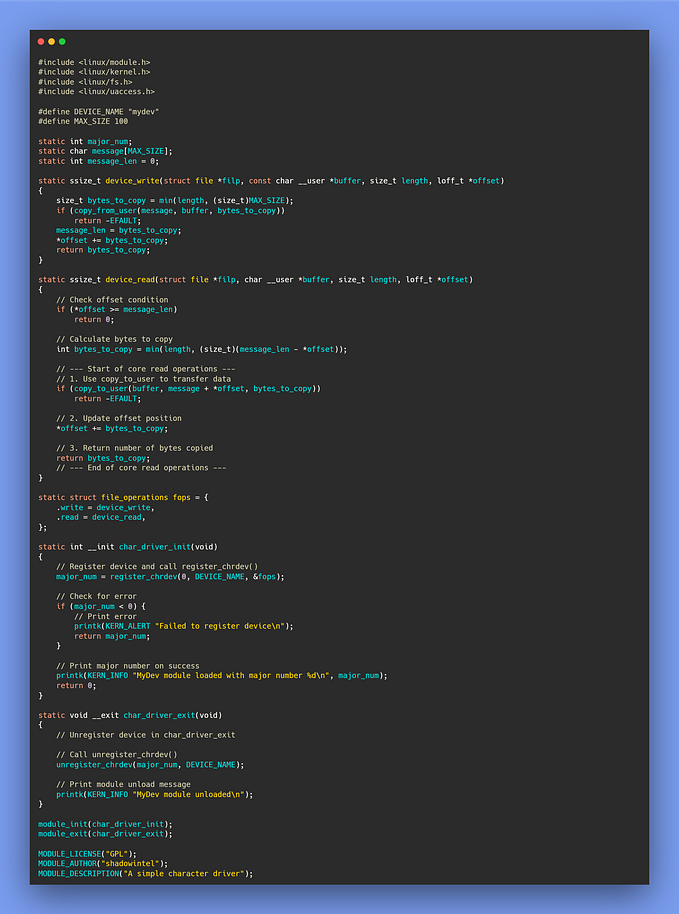

Compilation

The source code stored in a file is first given to a translator program (a compiler, assuming we write C or C++). The translation is performed in 4 main stages, each handled by the respective component of the compiler toolchain. I'll discuss each stage briefly, but I urge you to inspect the output of each stage yourself (the first two produce text; a disassembler helps with the binary ones) to gain clarity over it.

- Preprocessing: This stage processes inclusion directives (#include), conditional compilation instructions (#if, #ifdef, ...) and macro definitions (#define), after which it generates the preprocessed output. Use the -E flag with GCC to generate preprocessed output.

- Compilation: This (strangely named) stage takes the preprocessed source code as input and generates an assembly language source file (*.s) specific to the processor architecture being compiled for. Functions and global variables keep their symbolic names at this point, i.e. their symbolic names are preserved (local variables, like a above, become stack offsets such as [rbp-0x4]). Use the -S -masm=intel flags with GCC to generate a beautified (Intel syntax) assembly source output.

- Assembling: In this stage, the assembler takes the assembly source code from the previous stage and converts it into object code (machine code), i.e. a file consisting of raw bytes (the green highlights shown above). The object file produced is known as a relocatable binary (*.o). It carries all the relocation information (explained in more detail ahead), and no symbols/references are resolved yet. Use the -c flag with GCC to generate relocatable object code as output.

- Link editing: Relocatable binaries don't have an entry point address, since their object code still needs to be linked against some extra code (libraries) before it can be executed. This stage is responsible for compile-time linking (done by /usr/bin/ld, the GNU linker), which combines one or more object files (*.o) into a single binary program (an executable or a shared object). Compile-time linking assigns addresses (or offsets, if PIC is enforced) to function names (code symbols) and to variable and constant names (data symbols). Not all symbols are resolved, though: the resolution of external symbols (dynamic symbols, like shared library function names) is left to the runtime linker, a.k.a. the dynamic linker or program interpreter (which we'll talk about in no time). GCC performs this link step whenever none of -E, -S or -c is given; the -o flag names the resulting ready-to-execute binary program.

Let's create a file named backbencher.c with the content below.

```c
#include <stdio.h>

int main(void)
{
    printf("hello hell x_x");
    return 0;
}
```

Now, to compile this file into a binary executable, type the following command on the command line: gcc backbencher.c -o backbencher. Here gcc (the GNU C compiler) performs all 4 stages described above and generates the final binary named backbencher.

Knowing the compilation process is very helpful when working on disk-based infections, but it is not necessary for understanding what comes ahead. Therefore, I have limited our discussion of this topic.

Tracing System Calls

Once the compiler has done its hard work, we get a ready-to-execute ELF binary program consisting of instructions, data and metadata (to organise the instructions and data). Apart from the first 16 bytes (the e_ident[] field of the ELF header, which is machine-independent), all the information is stored as bytes in a processor-specific encoding. Now, the operating system is responsible for getting this program executed by the CPU. There is an eligibility criterion, though: for any program to be executed by the CPU, it must reside in main memory (RAM). A program therefore needs to be brought from disk into main memory in order to be eligible for execution.

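Assume we are on a Linux platform using /bin/bash as our shell to execute commands. Using /usr/bin/strace (e.g. strace ./backbencher), we can trace all the system calls issued by backbencher, which is a shared object ELF binary (see the orange highlight below). Modern GCC toolchains typically build position-independent executables, which carry the same ELF type (ET_DYN) as shared libraries.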

Loading, please wait… x_x

- When we enter the command ./backbencher to run the program, the shell (in a forked child) performs an execve() system call and the kernel's loader takes over. The kernel parses the invoked program's program header table, maps its loadable (PT_LOAD) segments from disk into a fresh address space and, since the program has a PT_INTERP segment, also maps the program interpreter named there. This process of getting a program from disk into memory is known as loading. (See the blue highlight above.)

- NOTE: The layout of the process address space for ./backbencher is decided by its Program Header Table (since the PHT is the component responsible for providing the execution view of an ELF binary).

- After the program (code and data) and the runtime/dynamic linker a.k.a. program interpreter (itself a shared object/.so) are mapped into memory, the kernel transfers control to the interpreter, passing sufficient information (argc, argv, envp and auxv, pushed onto the stack) to continue executing the application binary.

Execution Takeover by Dynamic Linker

The ELF specification allows us to specify a program interpreter (a.k.a. dynamic linker) that is expected to understand the ELF binary format and to carry the flow of execution forward once the loader completes its job. The path to the program interpreter is stored in the .interp section of the ELF binary on disk, which is described by the program header entry of type PT_INTERP and lies within the first loadable segment. Usually, the dynamic linker is present at /lib64/ld-linux-x86-64.so.2 and should not be confused with /usr/bin/ld (the program used for compile-time linking), although both deal with symbol management. Looking at the strace output, after the execve() syscall, the dynamic linker does the following:

- First, it handles its own relocation (don't forget that the dynamic linker is itself a shared object).

- Then it performs an access("/etc/ld.so.preload", R_OK) syscall, checking whether it can read (R_OK) the file /etc/ld.so.preload. This file contains a list of ELF shared objects to be loaded before any other dependency/shared library. (See the red highlight.)

- The dynamic linker now performs dependency resolution. It looks at the program's dynamic segment to build a list of all DT_NEEDED entries (shared object dependencies), loads them into the process address space (recursively, since each dependency may have its own DT_NEEDED entries) and then performs relocation on the loaded dependencies.

This starts with the openat() syscall (see the grey highlight above), where libc.so.6 (the standard C library .so) is opened and, in later syscalls, mapped into the process address space of backbencher by the dynamic linker.

Information about dependencies is present in the .dynamic section of the binary, where each one is marked as DT_NEEDED (the value of the d_tag field in struct ElfN_Dyn; see man 5 elf). Alternatively, /usr/bin/ldd can be used to figure out all the dependencies of a program, with the added advantage of eliminating duplicates.

Note: The dynamic linker will consult the file /etc/ld.so.preload and load the shared objects specified in it before resolving any other SO dependency.

- It then performs relocations on the invoked process's memory with the help of relocation records.

- It performs the last-minute relocations: delay loading, a.k.a. lazy loading or lazy binding. As discussed in the 4th step of the compilation process (link editing), some function symbols were left unresolved during the compile-time linking stage, including symbols external to the binary (e.g. symbols from a shared library/.so). Resolution of these external function symbols is deferred until the first time the function is called.

For example, any reference to printf() is not resolved (fixed up) until the first time the program calls it, since this function name (provided by libc.so) is a symbol external to the binary. These kinds of symbols are also known as dynamic symbols (present in the .dynsym section). The dynamic section is present only in dynamically linked ELF binaries.

You can use readelf to view the dynamic symbols of any ELF binary: $ readelf --dyn-syms ./program. printf, being a dynamic symbol, is highlighted in green below.

NOTE: Delay loading doesn't show up in the strace output; it is introduced in this article because it is leveraged by both viruses and memory corruption exploits (later we'll see how) to manipulate a program's behaviour at runtime. Given how much love and attention it gets from attackers, it deserves to be treated with respect.

- At the bottom of the strace output, write(1, "hello hell x_x", 14) = 14 (purple highlight) is the actual functionality of the program. In the source code we used printf("hello hell x_x");, which internally performs a write() syscall, writing the 14-character string to file descriptor 1 (STDOUT).

- Finally, the program issues an exit_group(0) syscall (yellow highlight in the strace output), corresponding to the return 0; statement in the C source code, and the process dies gracefully.

Wrapping Up!

In the end, a binary image looks something like this inside main memory:

EPILOGUE

This concludes our look at the binary life cycle. Knowing the binary file format (ELF for Linux or PE for Windows) is the key to understanding existing attack vectors and finding new ones. Understanding the techniques and tricks used by malware is relatively easy for someone who holds a strong foundation. In the next article, we set foot in the world of userland rootkits by discussing the most trivial yet decently effective technique leveraged by Linux malware :)

Cheers,

Abhinav Thakur

(a.k.a compilepeace)
